April 4, 2025 | 7 min read

By Meenesh Bhimani – Chief Medical Officer

Generative AI is transforming healthcare, but ensuring safety and reliability remains a critical challenge. We are excited to introduce a groundbreaking approach to AI safety validation called Real World Evaluation of Large Language Models in Healthcare (RWE-LLM), developed by Hippocratic AI.

What is RWE-LLM?

The RWE-LLM framework is a comprehensive safety validation methodology designed to bridge the gap between theoretical AI safety principles and real-world deployment. Unlike conventional benchmarking approaches that rely heavily on input data quality, RWE-LLM focuses on rigorous output testing across diverse clinical scenarios.

The framework leverages the expertise of 6,234 US licensed clinicians (5,969 nurses and 265 physicians) who evaluated 307,038 unique calls with our generative AI healthcare agent. Through structured error management and continuous feedback, the RWE-LLM framework has enabled significant improvements in AI safety. Clinical accuracy rates improved from ~80.0% (pre-Polaris) to 96.79% (Polaris 1.0), 98.75% (Polaris 2.0), and 99.38% (Polaris 3.0).

One of the most remarkable aspects of the RWE-LLM framework is that it provides a roadmap for deploying generative AI healthcare agents at scale to support healthcare abundance. By employing structured error management and a continuous feedback loop, the framework supports ongoing safety improvements even in autopilot deployment scenarios.

Background: The deployment of artificial intelligence (AI) in healthcare necessitates robust safety validation frameworks, particularly for systems directly interacting with patients. While theoretical frameworks exist, there remains a critical gap between abstract principles and practical implementation. Traditional LLM benchmarking approaches provide very limited output coverage and are insufficient for healthcare applications requiring high safety standards.

Objective: To develop and evaluate a comprehensive framework for healthcare AI safety validation through large-scale clinician engagement.

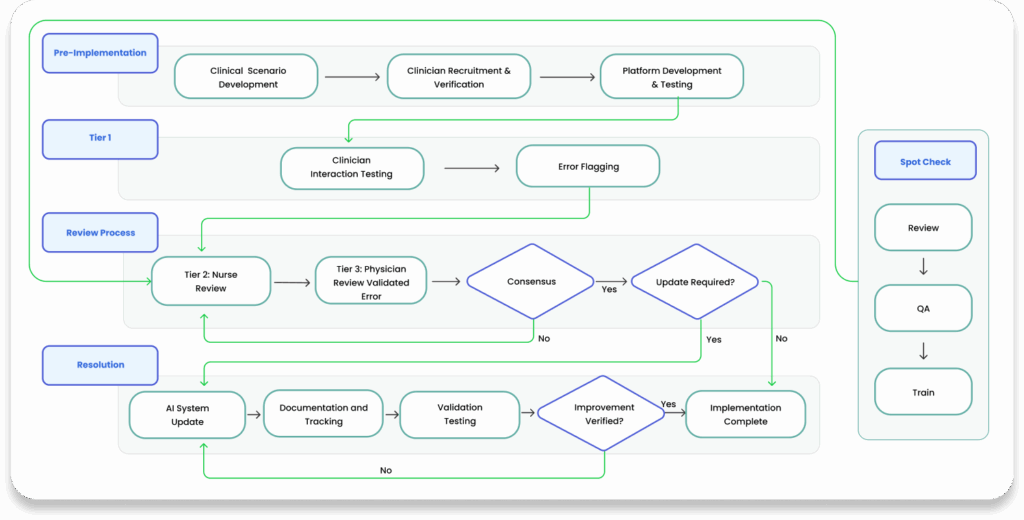

Methods: We implemented the RWE-LLM (Real World Evaluation of Large Language Models in Healthcare) framework, drawing inspiration from red teaming methodologies while expanding their scope to achieve comprehensive safety validation. Our approach spans four stages (pre-implementation, tiered review, resolution, and continuous monitoring) and emphasizes output testing rather than relying solely on input data quality. We engaged 6,234 US licensed clinicians (5,969 nurses and 265 physicians) with an average of 11.5 years of clinical experience. The framework employed a three-tier review process for error detection and resolution, evaluating a non-diagnostic AI Care Agent focused on patient education, follow-ups, and administrative support across four iterations (pre-Polaris and Polaris 1.0, 2.0, and 3.0).

Results: Over 307,000 unique calls were evaluated using the RWE-LLM framework. Each interaction was subject to potential error flagging across multiple severity categories, from minor clinical inaccuracies to significant safety concerns. The multi-tiered review system successfully processed all flagged interactions, with internal nursing reviews providing initial expert evaluation followed by physician adjudication when necessary. The framework demonstrated effective throughput in addressing identified safety concerns while maintaining consistent processing times and documentation standards. Systematic improvements in safety protocols were achieved through a continuous feedback loop between error identification and system enhancement. Performance metrics demonstrated substantial safety improvements between iterations, with correct medical advice rates improving from ~80.0% (pre-Polaris) to 96.79% (Polaris 1.0), 98.75% (Polaris 2.0), and 99.38% (Polaris 3.0). Incorrect advice resulting in potential minor harm decreased from 1.32% to 0.13% and then to 0.07%. Severe harm concerns went from 0.06% to 0.10% and then to 0.00%, so they were eliminated in the latest reported figure.
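To put the accuracy figures above in perspective, it can help to look at the share of calls that did not receive correct advice and how much that share shrank from one iteration to the next. The short Python sketch below is only illustrative arithmetic on the percentages quoted in this post. The names `ACCURACY_PCT`, `residual_error_pct`, `relative_reduction_pct`, and `summarize` are our own for this example and are not part of the RWE-LLM framework. It treats every call not counted as correct advice as "residual error", which is a simplifying assumption, and the pre-Polaris value is approximate.

```python
# Illustrative arithmetic only: the accuracy values are the ones quoted in
# this post; the function and variable names are hypothetical.

# Percent of calls with correct medical advice, per iteration.
# The pre-Polaris figure is approximate ("~80.0%") in the source.
ACCURACY_PCT = {
    "pre-Polaris": 80.0,
    "Polaris 1.0": 96.79,
    "Polaris 2.0": 98.75,
    "Polaris 3.0": 99.38,
}


def residual_error_pct(accuracy_pct: float) -> float:
    """Percent of calls NOT counted as correct advice (assumed: 100 - accuracy)."""
    return round(100.0 - accuracy_pct, 2)


def relative_reduction_pct(before: float, after: float) -> float:
    """Percent by which the residual error shrank between two iterations."""
    return 100.0 * (before - after) / before


def summarize(accuracy: dict) -> list:
    """Return (iteration, residual error %, relative reduction % or None) rows."""
    rows = []
    previous = None
    for name, acc in accuracy.items():
        err = residual_error_pct(acc)
        reduction = None if previous is None else relative_reduction_pct(previous, err)
        rows.append((name, err, reduction))
        previous = err
    return rows


if __name__ == "__main__":
    for name, err, reduction in summarize(ACCURACY_PCT):
        change = "n/a" if reduction is None else f"{reduction:.1f}% lower than previous"
        print(f"{name}: residual error {err:.2f}% ({change})")
```

Read this way, the reported accuracies correspond to residual error shares of roughly 20%, 3.21%, 1.25%, and 0.62%, so each Polaris release cut the remaining error by about half or more relative to the one before it. These are derived values, not figures reported separately in the paper.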

Conclusions: The successful nationwide implementation of the RWE-LLM framework establishes a practical model for ensuring AI safety in healthcare settings. Our methodology demonstrates that comprehensive output testing provides significantly stronger safety assurance than traditional input validation approaches used by horizontal LLMs. While resource-intensive, this approach proves that rigorous safety validation for healthcare AI systems is both necessary and achievable, setting a benchmark for future deployments.

Read the Full Published Paper: https://www.medrxiv.org/content/10.1101/2025.03.17.25324157v1

Read the full paper to explore the complete methodology, findings, and implications of the RWE-LLM framework. Stay tuned for more insights as we continue to refine and expand this groundbreaking framework.

Regards,

Dr. Meenesh Bhimani, Co-founder & Chief Medical Officer

and the Hippocratic AI Team